Tutorial: Cascading plug-in effects

Learn how to daisy chain audio processors or plug-ins using an AudioProcessorGraph to create your own channel strip. Learn how to use an AudioProcessorGraph in both a plug-in and a standalone application context.

Level: Intermediate

Platforms: Windows, macOS, Linux

Classes: AudioProcessor, AudioProcessorPlayer, AudioProcessorGraph, AudioProcessorGraph::AudioGraphIOProcessor, AudioProcessorGraph::Node, AudioDeviceManager

Getting started

Download the demo project for this tutorial here: PIP | ZIP. Unzip the project and open the first header file in the Projucer.

If you need help with this step, see Tutorial: Projucer Part 1: Getting started with the Projucer.

The demo project

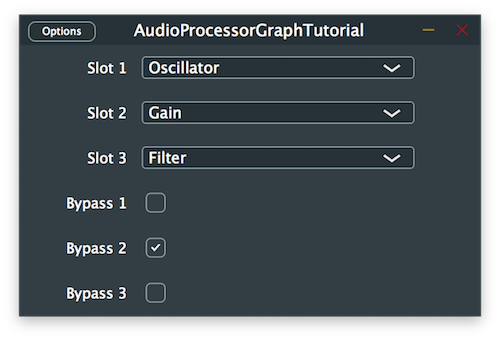

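The demo project simulates a channel strip in which different audio processors can be applied in series. There are three slots that can be bypassed individually, and each slot can hold one of three processors: an oscillator, a gain control or a filter. The plug-in applies the processing to the incoming audio and propagates the modified signal to the output.

Setting up the AudioProcessorGraph

The AudioProcessorGraph is a special type of AudioProcessor that lets us connect several AudioProcessor objects together as nodes in a graph and play back the result of the combined processing. To wire the graph nodes together, we add connections between the channels of the nodes in the order in which we want the audio signal to be processed.

The AudioProcessorGraph class also offers special node types for handling the input and output of audio and MIDI signals within the graph. When connected properly, the graph for our channel strip runs from the audio input node, through the processor slots in series, to the audio output node.

Let's start by setting up the main AudioProcessorGraph to receive incoming signals and send them back to the corresponding outputs unprocessed.

To reduce the character count for the nested classes used frequently in this tutorial, we first declare using aliases for the AudioGraphIOProcessor class and the Node class in the TutorialProcessor class: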

using AudioGraphIOProcessor = juce::AudioProcessorGraph::AudioGraphIOProcessor;

using Node = juce::AudioProcessorGraph::Node;

Then we declare the following private member variables using the shortened class names:

std::unique_ptr<juce::AudioProcessorGraph> mainProcessor;

Node::Ptr audioInputNode;

Node::Ptr audioOutputNode;

Node::Ptr midiInputNode;

Node::Ptr midiOutputNode;

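Here we create a pointer to the main AudioProcessorGraph, as well as pointers to the input and output processor nodes that will be instantiated later within the graph.

Next, in the TutorialProcessor constructor, we set the default bus properties for the plug-in and instantiate the main AudioProcessorGraph: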

TutorialProcessor()

: AudioProcessor (BusesProperties().withInput ("Input", juce::AudioChannelSet::stereo(), true).withOutput ("Output", juce::AudioChannelSet::stereo(), true)),

mainProcessor (new juce::AudioProcessorGraph()),

Since we are dealing with a plug-in, we need to implement the isBusesLayoutSupported() callback to tell the plug-in host or DAW which channel sets we support. In this example we support only mono-to-mono and stereo-to-stereo configurations:

bool isBusesLayoutSupported (const BusesLayout& layouts) const override

{

if (layouts.getMainInputChannelSet() == juce::AudioChannelSet::disabled()

|| layouts.getMainOutputChannelSet() == juce::AudioChannelSet::disabled())

return false;

if (layouts.getMainOutputChannelSet() != juce::AudioChannelSet::mono()

&& layouts.getMainOutputChannelSet() != juce::AudioChannelSet::stereo())

return false;

return layouts.getMainInputChannelSet() == layouts.getMainOutputChannelSet();

}
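
The decision made by the callback above can be restated as a small standalone function. This is only a sketch: the ChannelSet enum and the isLayoutSupported() name are hypothetical stand-ins for juce::AudioChannelSet and the real callback, chosen so that the rule can be read and tested without JUCE.

```cpp
#include <cassert>

// Hypothetical stand-in for juce::AudioChannelSet, reduced to the cases the rule cares about.
enum class ChannelSet { disabled, mono, stereo, other };

// Same rule as isBusesLayoutSupported() above:
// accept only mono-to-mono and stereo-to-stereo layouts.
bool isLayoutSupported (ChannelSet mainInput, ChannelSet mainOutput)
{
    // Neither main bus may be disabled.
    if (mainInput == ChannelSet::disabled || mainOutput == ChannelSet::disabled)
        return false;

    // The output must be mono or stereo.
    if (mainOutput != ChannelSet::mono && mainOutput != ChannelSet::stereo)
        return false;

    // The input must use the same channel set as the output.
    return mainInput == mainOutput;
}
```

With this rule a mono input paired with a stereo output is rejected, as is any layout wider than stereo.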

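If you want to learn more about bus layouts, see Tutorial: Configuring the right bus layouts for your plugins.

For the TutorialProcessor to process audio through the graph, we have to override the three main functions of the AudioProcessor class that perform signal processing, namely prepareToPlay(), releaseResources() and processBlock(), and call the corresponding functions on the AudioProcessorGraph.

Let's start with the prepareToPlay() function. First we call the setPlayConfigDetails() function to inform the AudioProcessorGraph of the number of I/O channels, the sample rate and the number of samples per block: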

void prepareToPlay (double sampleRate, int samplesPerBlock) override

{

mainProcessor->setPlayConfigDetails (getMainBusNumInputChannels(),

getMainBusNumOutputChannels(),

sampleRate,

samplesPerBlock);

mainProcessor->prepareToPlay (sampleRate, samplesPerBlock);

initialiseGraph();

}

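We then call the prepareToPlay() function of the AudioProcessorGraph with the same information, and call the initialiseGraph() helper function, defined later, to create and connect the nodes in the graph.

The releaseResources() function is self-explanatory and simply calls the same function on the AudioProcessorGraph instance: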

void releaseResources() override

{

mainProcessor->releaseResources();

}

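Finally, in the processBlock() function, we clear the samples in any additional output channels that may contain garbage data, just in case, and call the updateGraph() helper function, defined later, which rebuilds the graph if the channel strip configuration has changed. The processBlock() function of the AudioProcessorGraph is then called at the end of the function: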

void processBlock (juce::AudioSampleBuffer& buffer, juce::MidiBuffer& midiMessages) override

{

for (int i = getTotalNumInputChannels(); i < getTotalNumOutputChannels(); ++i)

buffer.clear (i, 0, buffer.getNumSamples());

updateGraph();

mainProcessor->processBlock (buffer, midiMessages);

}

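The initialiseGraph() function, called earlier in the prepareToPlay() callback, starts by clearing any previously existing nodes and connections from the AudioProcessorGraph. This also takes care of deleting the AudioProcessor instances associated with the removed nodes. We then instantiate the AudioGraphIOProcessor objects for the graph I/O and add them to the graph as nodes: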

void initialiseGraph()

{

mainProcessor->clear();

audioInputNode = mainProcessor->addNode (std::make_unique<AudioGraphIOProcessor> (AudioGraphIOProcessor::audioInputNode));

audioOutputNode = mainProcessor->addNode (std::make_unique<AudioGraphIOProcessor> (AudioGraphIOProcessor::audioOutputNode));

midiInputNode = mainProcessor->addNode (std::make_unique<AudioGraphIOProcessor> (AudioGraphIOProcessor::midiInputNode));

midiOutputNode = mainProcessor->addNode (std::make_unique<AudioGraphIOProcessor> (AudioGraphIOProcessor::midiOutputNode));

connectAudioNodes();

connectMidiNodes();

}

To propagate audio and MIDI data, we need to add connections between the newly created nodes in the graph, which is done in the following helper functions:

void connectAudioNodes()

{

for (int channel = 0; channel < 2; ++channel)

mainProcessor->addConnection ({ { audioInputNode->nodeID, channel },

{ audioOutputNode->nodeID, channel } });

}

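Here we call the addConnection() function on the AudioProcessorGraph instance, passing the source and destination nodes we wish to connect in the form of a Connection object. Each end of the connection requires a nodeID and a channel index, and the whole process is repeated for every required channel.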

void connectMidiNodes()

{

mainProcessor->addConnection ({ { midiInputNode->nodeID, juce::AudioProcessorGraph::midiChannelIndex },

{ midiOutputNode->nodeID, juce::AudioProcessorGraph::midiChannelIndex } });

}

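The same is done for the MIDI I/O nodes, except for the channel index argument. Since MIDI signals are not sent through regular audio channels, we have to supply a special channel index that is specified as an enum in the AudioProcessorGraph class.

At this stage of the tutorial, you should be able to hear the signal pass through the graph unaltered.

Beware of screaming feedback when testing the plug-in with built-in inputs and outputs. You can avoid this problem by using headphones.

Implementing different processors

In this part of the tutorial, we create different processors that can be used within our channel strip plug-in to modify the incoming audio signal. Feel free to create additional processors or to customise the following ones to your taste.

To avoid repeating code across the processors we want to create, let's first declare an AudioProcessor base class that the individual processors inherit from, overriding the necessary functions only once for simplicity: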

class ProcessorBase : public juce::AudioProcessor

{

public:

//==============================================================================

ProcessorBase()

: AudioProcessor (BusesProperties().withInput ("Input", juce::AudioChannelSet::stereo()).withOutput ("Output", juce::AudioChannelSet::stereo()))

{

}

//==============================================================================

void prepareToPlay (double, int) override {}

void releaseResources() override {}

void processBlock (juce::AudioSampleBuffer&, juce::MidiBuffer&) override {}

//==============================================================================

juce::AudioProcessorEditor* createEditor() override { return nullptr; }

bool hasEditor() const override { return false; }

//==============================================================================

const juce::String getName() const override { return {}; }

bool acceptsMidi() const override { return false; }

bool producesMidi() const override { return false; }

double getTailLengthSeconds() const override { return 0; }

//==============================================================================

int getNumPrograms() override { return 0; }

int getCurrentProgram() override { return 0; }

void setCurrentProgram (int) override {}

const juce::String getProgramName (int) override { return {}; }

void changeProgramName (int, const juce::String&) override {}

//==============================================================================

void getStateInformation (juce::MemoryBlock&) override {}

void setStateInformation (const void*, int) override {}

private:

//==============================================================================

JUCE_DECLARE_NON_COPYABLE_WITH_LEAK_DETECTOR (ProcessorBase)

};

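The following three processors use the DSP module to simplify their implementation. If you want to learn more about DSP, see Tutorial: Introduction to DSP.

Implementing an oscillator

The first processor is a simple oscillator that generates a constant sine wave tone at 440Hz.

We derive the OscillatorProcessor class from the previously defined ProcessorBase, override the getName() function to provide a meaningful name, and declare a dsp::Oscillator object from the DSP module: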

class OscillatorProcessor : public ProcessorBase

{

public:

//...

const juce::String getName() const override { return "Oscillator"; }

private:

juce::dsp::Oscillator<float> oscillator;

};

In the constructor, we set the frequency and the waveform of the oscillator by calling the setFrequency() and initialise() functions respectively on the dsp::Oscillator object:

OscillatorProcessor()

{

oscillator.setFrequency (440.0f);

oscillator.initialise ([] (float x) { return std::sin (x); });

}
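
To see what setting a frequency and a waveform function amounts to, here is a minimal standalone sketch of a phase-accumulating oscillator. The SimpleOscillator class is hypothetical and is not the dsp::Oscillator implementation. In particular, the phase range of 0 to 2π passed to the waveform function is an assumption of this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <functional>
#include <utility>

constexpr double twoPi = 6.283185307179586;

// Hypothetical, simplified oscillator driven by a user-supplied waveform function.
class SimpleOscillator
{
public:
    void initialise (std::function<float (float)> waveform) { generator = std::move (waveform); }
    void setFrequency (float newFrequencyHz)                { frequencyHz = newFrequencyHz; }
    void prepare (double newSampleRate)                     { sampleRate = newSampleRate; phase = 0.0; }

    // Evaluates the waveform at the current phase, then advances the phase
    // by 2*pi*f / sampleRate and wraps it back into [0, 2*pi).
    float nextSample()
    {
        assert (generator != nullptr && sampleRate > 0.0);

        const float sample = generator (static_cast<float> (phase));

        phase += twoPi * frequencyHz / sampleRate;

        if (phase >= twoPi)
            phase -= twoPi;

        return sample;
    }

private:
    std::function<float (float)> generator;
    double sampleRate = 0.0;
    double phase = 0.0;
    float frequencyHz = 0.0f;
};
```

Passing a different function to initialise(), for example one that returns a square or sawtooth shape, changes the waveform without touching the phase logic.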

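In the prepareToPlay() function, we create a dsp::ProcessSpec object that describes the sample rate, the number of samples per block and the number of channels to the dsp::Oscillator object, and pass the specification by calling the prepare() function on it: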

void prepareToPlay (double sampleRate, int samplesPerBlock) override

{

juce::dsp::ProcessSpec spec { sampleRate, static_cast<juce::uint32> (samplesPerBlock), 2 };

oscillator.prepare (spec);

}

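Next, in the processBlock() function, we create a dsp::AudioBlock object from the AudioSampleBuffer passed as an argument, declare the processing context from it as a dsp::ProcessContextReplacing object, and pass that context to the process() function of the dsp::Oscillator object: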

void processBlock (juce::AudioSampleBuffer& buffer, juce::MidiBuffer&) override

{

juce::dsp::AudioBlock<float> block (buffer);

juce::dsp::ProcessContextReplacing<float> context (block);

oscillator.process (context);

}

Finally, we can override the reset() function of the AudioProcessor to reset the state of the dsp::Oscillator object by calling the same function on it:

void reset() override

{

oscillator.reset();

}

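We now have an oscillator that we can use in our channel strip plug-in.

Exercise: Modify the oscillator's initialise() function to generate a different waveform, and change the target frequency.

Implementing a gain control

The second processor is a simple gain control that attenuates the incoming signal by 6dB (a gain of -6dB).

We derive the GainProcessor class from the previously defined ProcessorBase, override the getName() function to provide a meaningful name, and declare a dsp::Gain object from the DSP module: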

class GainProcessor : public ProcessorBase

{

public:

//...

const juce::String getName() const override { return "Gain"; }

private:

juce::dsp::Gain<float> gain;

};

In the constructor, we set the gain of the gain control in decibels by calling the setGainDecibels() function on the dsp::Gain object:

GainProcessor()

{

gain.setGainDecibels (-6.0f);

}
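
To put the -6dB figure in perspective, the sketch below converts a gain in decibels to the linear factor each sample is multiplied by, using the standard amplitude relation 10^(dB/20). The decibelsToLinearGain() helper is a hypothetical name used for illustration. It is not a JUCE function.

```cpp
#include <cassert>
#include <cmath>

// Converts a gain in decibels to a linear amplitude factor: 10^(dB / 20).
inline float decibelsToLinearGain (float decibels)
{
    return std::pow (10.0f, decibels / 20.0f);
}
```

A gain of -6dB therefore scales the signal to roughly half of its amplitude (about 0.501), 0dB leaves it unchanged, and +6dB roughly doubles it.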

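In the prepareToPlay() function, we create a dsp::ProcessSpec object that describes the sample rate, the number of samples per block and the number of channels to the dsp::Gain object, and pass the specification by calling the prepare() function on it: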

void prepareToPlay (double sampleRate, int samplesPerBlock) override

{

juce::dsp::ProcessSpec spec { sampleRate, static_cast<juce::uint32> (samplesPerBlock), 2 };

gain.prepare (spec);

}

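Next, in the processBlock() function, we create a dsp::AudioBlock object from the AudioSampleBuffer passed as an argument, declare the processing context from it as a dsp::ProcessContextReplacing object, and pass that context to the process() function of the dsp::Gain object: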

void processBlock (juce::AudioSampleBuffer& buffer, juce::MidiBuffer&) override

{

juce::dsp::AudioBlock<float> block (buffer);

juce::dsp::ProcessContextReplacing<float> context (block);

gain.process (context);

}

Finally, we can override the reset() function of the AudioProcessor to reset the state of the dsp::Gain object by calling the same function on it:

void reset() override

{

gain.reset();

}

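We now have a gain control that we can use in our channel strip plug-in.

Exercise: Change the setGainDecibels() call of the gain control to lower the gain further or to boost the signal. (Watch your levels when boosting!)

Implementing a filter

The third processor is a simple high-pass filter that attenuates frequencies below 1kHz.

We derive the FilterProcessor class from the previously defined ProcessorBase, override the getName() function to provide a meaningful name, and declare a dsp::ProcessorDuplicator object from the DSP module. This lets us take the mono processor of the dsp::IIR::Filter class and convert it into a multi-channel version by providing its shared state as a dsp::IIR::Coefficients class: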

class FilterProcessor : public ProcessorBase

{

public:

FilterProcessor() {}

//...

const juce::String getName() const override { return "Filter"; }

private:

juce::dsp::ProcessorDuplicator<juce::dsp::IIR::Filter<float>, juce::dsp::IIR::Coefficients<float>> filter;

};

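In the prepareToPlay() function, we first generate the coefficients for the filter using the makeHighPass() function and assign them as the shared processing state of the duplicator. We then create a dsp::ProcessSpec object that describes the sample rate, the number of samples per block and the number of channels to the dsp::ProcessorDuplicator object, and pass the specification by calling the prepare() function on it: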

void prepareToPlay (double sampleRate, int samplesPerBlock) override

{

*filter.state = *juce::dsp::IIR::Coefficients<float>::makeHighPass (sampleRate, 1000.0f);

juce::dsp::ProcessSpec spec { sampleRate, static_cast<juce::uint32> (samplesPerBlock), 2 };

filter.prepare (spec);

}
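
For readers curious about what a set of high-pass coefficients looks like, the sketch below computes second-order (biquad) coefficients using the widely published Audio EQ Cookbook formulas. This is an assumed stand-in for illustration only: the function and struct names are hypothetical, and the values are not claimed to match what makeHighPass() produces internally.

```cpp
#include <cassert>
#include <cmath>

// Normalised biquad coefficients for
// y[n] = b0 x[n] + b1 x[n-1] + b2 x[n-2] - a1 y[n-1] - a2 y[n-2].
struct BiquadCoefficients { double b0, b1, b2, a1, a2; };

// Audio EQ Cookbook high-pass design (assumption: not necessarily JUCE's formula).
BiquadCoefficients makeCookbookHighPass (double sampleRate, double cutoffHz,
                                         double q = 0.7071067811865476)
{
    assert (sampleRate > 0.0 && cutoffHz > 0.0 && cutoffHz < sampleRate * 0.5 && q > 0.0);

    const double pi    = 3.141592653589793;
    const double w0    = 2.0 * pi * cutoffHz / sampleRate;  // normalised angular cutoff
    const double cosW0 = std::cos (w0);
    const double alpha = std::sin (w0) / (2.0 * q);
    const double a0    = 1.0 + alpha;                        // used to normalise the rest

    return { (1.0 + cosW0) * 0.5 / a0,
             -(1.0 + cosW0) / a0,
             (1.0 + cosW0) * 0.5 / a0,
             -2.0 * cosW0 / a0,
             (1.0 - alpha) / a0 };
}

// Magnitude of the transfer function at 0Hz (z = 1) and at Nyquist (z = -1).
double gainAtDc (const BiquadCoefficients& c)
{
    return std::abs ((c.b0 + c.b1 + c.b2) / (1.0 + c.a1 + c.a2));
}

double gainAtNyquist (const BiquadCoefficients& c)
{
    return std::abs ((c.b0 - c.b1 + c.b2) / (1.0 - c.a1 + c.a2));
}
```

Evaluating the two helpers for a 1kHz cutoff shows the expected high-pass behaviour: the response is zero at 0Hz and unity at the Nyquist frequency.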

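Next, in the processBlock() function, we create a dsp::AudioBlock object from the AudioSampleBuffer passed as an argument, declare the processing context from it as a dsp::ProcessContextReplacing object, and pass that context to the process() function of the dsp::ProcessorDuplicator object: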

void processBlock (juce::AudioSampleBuffer& buffer, juce::MidiBuffer&) override

{

juce::dsp::AudioBlock<float> block (buffer);

juce::dsp::ProcessContextReplacing<float> context (block);

filter.process (context);

}

Finally, we can override the reset() function of the AudioProcessor to reset the state of the dsp::ProcessorDuplicator object by calling the same function on it:

void reset() override

{

filter.reset();

}

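We now have a filter that we can use in our channel strip plug-in.

Exercise: Change the filter coefficients to produce a low-pass or band-pass filter with a different cutoff frequency and resonance.

Connecting graph nodes together

Now that we have implemented several processors that can be used within the AudioProcessorGraph, let's start connecting them together depending on the user's selection.

In the TutorialProcessor class, we add three AudioParameterChoice pointers and four AudioParameterBool pointers as private member variables. They store the processor chosen for each slot, the bypass state of each slot and the input mute state. We also declare node pointers for the three processor slots, which will be instantiated later within the graph, and for convenience provide the selectable choices as a StringArray: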

juce::StringArray processorChoices { "Empty", "Oscillator", "Gain", "Filter" };

std::unique_ptr<juce::AudioProcessorGraph> mainProcessor;

juce::AudioParameterBool* muteInput;

juce::AudioParameterChoice* processorSlot1;

juce::AudioParameterChoice* processorSlot2;

juce::AudioParameterChoice* processorSlot3;

juce::AudioParameterBool* bypassSlot1;

juce::AudioParameterBool* bypassSlot2;

juce::AudioParameterBool* bypassSlot3;

Node::Ptr audioInputNode;

Node::Ptr audioOutputNode;

Node::Ptr midiInputNode;

Node::Ptr midiOutputNode;

Node::Ptr slot1Node;

Node::Ptr slot2Node;

Node::Ptr slot3Node;

//==============================================================================

JUCE_DECLARE_NON_COPYABLE_WITH_LEAK_DETECTOR (TutorialProcessor)

};

Then, in the constructor, we instantiate the audio parameters and call the addParameter() function to tell the AudioProcessor which parameters should be available in the plug-in:

TutorialProcessor()

: AudioProcessor (BusesProperties().withInput ("Input", juce::AudioChannelSet::stereo(), true).withOutput ("Output", juce::AudioChannelSet::stereo(), true)),

mainProcessor (new juce::AudioProcessorGraph()),

muteInput (new juce::AudioParameterBool ("mute", "Mute Input", true)),

processorSlot1 (new juce::AudioParameterChoice ("slot1", "Slot 1", processorChoices, 0)),

processorSlot2 (new juce::AudioParameterChoice ("slot2", "Slot 2", processorChoices, 0)),

processorSlot3 (new juce::AudioParameterChoice ("slot3", "Slot 3", processorChoices, 0)),

bypassSlot1 (new juce::AudioParameterBool ("bypass1", "Bypass 1", false)),

bypassSlot2 (new juce::AudioParameterBool ("bypass2", "Bypass 2", false)),

bypassSlot3 (new juce::AudioParameterBool ("bypass3", "Bypass 3", false))

{

addParameter (muteInput);

addParameter (processorSlot1);

addParameter (processorSlot2);

addParameter (processorSlot3);

addParameter (bypassSlot1);

addParameter (bypassSlot2);

addParameter (bypassSlot3);

}

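In this tutorial we use the GenericAudioProcessorEditor class, which automatically creates a ComboBox for each parameter of type AudioParameterChoice in the plug-in's processor and a ToggleButton for each parameter of type AudioParameterBool.

To learn more about audio parameters and how to customise them, see Tutorial: Adding plug-in parameters. For a more seamless and elegant way of saving and loading parameters, take a look at Tutorial: Saving and loading your plug-in state.

When setting up the AudioProcessorGraph in the first part of the tutorial, we called the updateGraph() helper function in the processBlock() callback of the TutorialProcessor class. The purpose of this function is to update the graph by re-instantiating the appropriate AudioProcessor objects and nodes and reconnecting the graph according to the user's current selection. Let's implement that helper function as follows: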

void updateGraph()

{

bool hasChanged = false;

juce::Array<juce::AudioParameterChoice*> choices { processorSlot1,

processorSlot2,

processorSlot3 };

juce::Array<juce::AudioParameterBool*> bypasses { bypassSlot1,

bypassSlot2,

bypassSlot3 };

juce::ReferenceCountedArray<Node> slots;

slots.add (slot1Node);

slots.add (slot2Node);

slots.add (slot3Node);

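The function starts by declaring a local variable that records whether the state of the graph has changed since the last iteration of audio block processing. It also creates arrays to make it easier to iterate over the processor choices, the bypass states and the corresponding nodes in the graph.

In the next part, we iterate over the three available processor slots and check the option selected for each AudioParameterChoice object: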

for (int i = 0; i < 3; ++i)

{

auto& choice = choices.getReference (i);

auto slot = slots.getUnchecked (i);

if (choice->getIndex() == 0) // [1]

{

if (slot != nullptr)

{

mainProcessor->removeNode (slot.get());

slots.set (i, nullptr);

hasChanged = true;

}

}

else if (choice->getIndex() == 1) // [2]

{

if (slot != nullptr)

{

if (slot->getProcessor()->getName() == "Oscillator")

continue;

mainProcessor->removeNode (slot.get());

}

slots.set (i, mainProcessor->addNode (std::make_unique<OscillatorProcessor>()));

hasChanged = true;

}

else if (choice->getIndex() == 2) // [3]

{

if (slot != nullptr)

{

if (slot->getProcessor()->getName() == "Gain")

continue;

mainProcessor->removeNode (slot.get());

}

slots.set (i, mainProcessor->addNode (std::make_unique<GainProcessor>()));

hasChanged = true;

}

else if (choice->getIndex() == 3) // [4]

{

if (slot != nullptr)

{

if (slot->getProcessor()->getName() == "Filter")

continue;

mainProcessor->removeNode (slot.get());

}

slots.set (i, mainProcessor->addNode (std::make_unique<FilterProcessor>()));

hasChanged = true;

}

}

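- [1]: If the choice remains in the "Empty" state, we first check whether the node was previously instantiated with a processor. If so, we remove the node from the graph, clear the reference to the node and set the hasChanged flag to true. Otherwise, the state has not changed and the graph does not need rebuilding.

- [2]: If the user chooses the "Oscillator" state, we first check whether the currently instantiated node is already an oscillator processor. If so, the state has not changed and we continue to the next slot. Otherwise, if the slot was already occupied we remove the node from the graph, then instantiate an oscillator, set the reference to the new node and set the hasChanged flag to true.

- [3]: We do the same for the "Gain" state and instantiate a gain processor if necessary.

- [4]: Likewise, we repeat the same process for the "Filter" state and instantiate a filter processor if necessary.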
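
The four branches described above follow a single rule, which can be sketched as a standalone function. Everything here is hypothetical illustration rather than tutorial code: the SlotAction enum and decideSlotAction() name are invented, and an empty string stands in for a slot that holds no node.

```cpp
#include <cassert>
#include <string>
#include <vector>

enum class SlotAction { keep, remove, replace };

// Decides what to do with one slot, given the name of the processor currently
// in the slot (empty string = no node) and the index chosen by the user.
// Index 0 is assumed to be the "Empty" choice, as in processorChoices.
SlotAction decideSlotAction (const std::string& currentName,
                             int choiceIndex,
                             const std::vector<std::string>& choices)
{
    assert (choiceIndex >= 0 && choiceIndex < static_cast<int> (choices.size()));

    if (choiceIndex == 0)
        return currentName.empty() ? SlotAction::keep      // already empty: nothing to do
                                   : SlotAction::remove;   // occupied: remove the node

    if (currentName == choices[static_cast<size_t> (choiceIndex)])
        return SlotAction::keep;                           // same processor already loaded

    return SlotAction::replace;                            // empty or different: (re)instantiate
}
```

Only the remove and replace outcomes correspond to setting the hasChanged flag. The keep outcome matches the cases where the loop moves on to the next slot without touching the graph.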

The next section is only executed if the state of the graph has changed, and starts connecting the nodes:

if (hasChanged)

{

for (auto connection : mainProcessor->getConnections()) // [5]

mainProcessor->removeConnection (connection);

juce::ReferenceCountedArray<Node> activeSlots;

for (auto slot : slots)

{

if (slot != nullptr)

{

activeSlots.add (slot); // [6]

slot->getProcessor()->setPlayConfigDetails (getMainBusNumInputChannels(),

getMainBusNumOutputChannels(),

getSampleRate(),

getBlockSize());

}

}

if (activeSlots.isEmpty()) // [7]

{

connectAudioNodes();

}

else

{

for (int i = 0; i < activeSlots.size() - 1; ++i) // [8]

{

for (int channel = 0; channel < 2; ++channel)

mainProcessor->addConnection ({ { activeSlots.getUnchecked (i)->nodeID, channel },

{ activeSlots.getUnchecked (i + 1)->nodeID, channel } });

}

for (int channel = 0; channel < 2; ++channel) // [9]

{

mainProcessor->addConnection ({ { audioInputNode->nodeID, channel },

{ activeSlots.getFirst()->nodeID, channel } });

mainProcessor->addConnection ({ { activeSlots.getLast()->nodeID, channel },

{ audioOutputNode->nodeID, channel } });

}

}

connectMidiNodes();

for (auto node : mainProcessor->getNodes()) // [10]

node->getProcessor()->enableAllBuses();

}

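- [5]: First we remove all the connections in the graph to start from a blank state.

- [6]: Then we iterate over the slots and check whether each one holds an AudioProcessor node within the graph. If so, we add the node to a temporary array of active nodes and call the setPlayConfigDetails() function on the corresponding processor instance with the channel, sample rate and block size information, to prepare the node for future processing.

- [7]: Next, if no active slots were found, all the choices are in the "Empty" state and the audio I/O processor nodes can simply be connected together.

- [8]: Otherwise, there is at least one node that should sit between the audio I/O processor nodes, so we can start connecting the active slots together in ascending order of slot number. Notice that the number of pairs of connections we need is only the number of active slots minus one.

- [9]: We then finish connecting the graph by linking the audio input processor node to the first active slot in the chain and the last active slot to the audio output processor node.

- [10]: Finally, we connect the MIDI I/O nodes together and make sure that all the buses in the audio processors are enabled.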
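
The connection order described in steps [7] to [9] can be modelled without JUCE as a function that lists the hops for a single channel. This is a sketch under stated assumptions: NodeId, Hop and buildChain() are hypothetical names, and plain integers stand in for the graph's node IDs.

```cpp
#include <cassert>
#include <utility>
#include <vector>

using NodeId = int;                        // hypothetical stand-in for a graph node ID
using Hop    = std::pair<NodeId, NodeId>;  // (source node, destination node)

// Returns the ordered hops for one channel:
// input -> first active slot -> ... -> last active slot -> output,
// or simply input -> output when no slot is active.
std::vector<Hop> buildChain (NodeId input,
                             const std::vector<NodeId>& activeSlots,
                             NodeId output)
{
    std::vector<Hop> hops;
    NodeId previous = input;

    for (NodeId slot : activeSlots)
    {
        hops.emplace_back (previous, slot);
        previous = slot;
    }

    hops.emplace_back (previous, output);
    return hops;
}
```

For N active slots this yields N + 1 hops per channel: the N - 1 slot-to-slot pairs from step [8] plus the two end links from step [9]. With no active slots it degenerates to the single input-to-output hop from step [7].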

for (int i = 0; i < 3; ++i)

{

auto slot = slots.getUnchecked (i);

auto& bypass = bypasses.getReference (i);

if (slot != nullptr)

slot->setBypassed (bypass->get());

}

audioInputNode->setBypassed (muteInput->get());

slot1Node = slots.getUnchecked (0);

slot2Node = slots.getUnchecked (1);

slot3Node = slots.getUnchecked (2);

}

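In the last section of the updateGraph() helper function, we handle the bypass state of the processors by checking whether each slot is active and bypassing its AudioProcessor if the corresponding check box is toggled. We also check whether to mute the input, to avoid feedback loops when testing. Finally, we assign the newly created nodes back to their corresponding slots for the next iteration.

The plug-in should now run, processing incoming audio through the processors loaded in the graph.

Exercise: Create an additional processor node of your choice and add it to the AudioProcessorGraph (for example, a processor that handles MIDI messages).

Converting the plug-in into an application

If you are interested in using the AudioProcessorGraph within a standalone app, this optional section covers that in detail.

First, we have to convert the main TutorialProcessor class into a subclass of Component instead of AudioProcessor. To match the naming convention of other JUCE GUI applications, we also rename the class to MainComponent: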

class MainComponent : public juce::Component,

private juce::Timer

{

public:

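If you are following this tutorial using the PIP file, change the "mainClass" and "type" fields to reflect the change, and amend the "dependencies" field appropriately. If you are using the ZIP version of the project, make sure that the Main.cpp file follows the "GUI Application" template format.

When creating a plug-in, all the I/O device management and playback functionality is controlled by the host, so we do not need to worry about setting these up. In a standalone application, however, we have to manage this ourselves. We therefore declare an AudioDeviceManager and an AudioProcessorPlayer as private member variables in the MainComponent class, to allow communication between our AudioProcessorGraph and the audio I/O devices available on the system: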

juce::AudioDeviceManager deviceManager;

juce::AudioProcessorPlayer player;

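The AudioDeviceManager is a convenient class that manages audio and MIDI devices on all platforms, and the AudioProcessorPlayer allows for easy playback through an AudioProcessorGraph.

In the constructor, instead of initialising plug-in parameters, we create regular GUI components and initialise the AudioDeviceManager and the AudioProcessorPlayer: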

MainComponent()

: mainProcessor (new juce::AudioProcessorGraph())

{

auto inputDevice = juce::MidiInput::getDefaultDevice();

auto outputDevice = juce::MidiOutput::getDefaultDevice();

mainProcessor->enableAllBuses();

deviceManager.initialiseWithDefaultDevices (2, 2); // [1]

deviceManager.addAudioCallback (&player); // [2]

deviceManager.setMidiInputDeviceEnabled (inputDevice.identifier, true);

deviceManager.addMidiInputDeviceCallback (inputDevice.identifier, &player); // [3]

deviceManager.setDefaultMidiOutputDevice (outputDevice.identifier);

initialiseGraph();

player.setProcessor (mainProcessor.get()); // [4]

setSize (600, 400);

startTimer (100);

}

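Here we first initialise the device manager with the default audio device and two inputs and two outputs [1]. We then add the AudioProcessorPlayer to the device manager as an audio callback [2], and as a MIDI callback using the default MIDI device [3]. After initialising the graph, we can set the AudioProcessorGraph as the processor to play by calling the setProcessor() function on the AudioProcessorPlayer [4].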

~MainComponent() override

{

auto device = juce::MidiInput::getDefaultDevice();

deviceManager.removeAudioCallback (&player);

deviceManager.setMidiInputDeviceEnabled (device.identifier, false);

deviceManager.removeMidiInputDeviceCallback (device.identifier, &player);

}

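In the destructor, we make sure to remove the AudioProcessorPlayer as an audio and MIDI callback when the application shuts down.

Unlike in the plug-in implementation, the AudioProcessorPlayer takes care of processing the audio automatically, so we do not need to call the prepareToPlay() and processBlock() functions of the AudioProcessorGraph ourselves.

However, we still need a way to update the graph when the user changes parameters. We do this by deriving MainComponent from the Timer class and overriding the timerCallback() function: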

void timerCallback() override { updateGraph(); }

Using a timer callback is not the most efficient solution. It is generally best practice to register as a listener to the appropriate components instead.
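
As a rough illustration of the listener idea, the sketch below shows a value holder that notifies registered callbacks only when its value actually changes, so a graph rebuild could be requested on demand rather than polled every 100ms. The ObservableChoice class is entirely hypothetical. JUCE components and parameters offer their own listener interfaces, which this sketch does not use.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical choice holder that notifies listeners only when its index changes.
class ObservableChoice
{
public:
    using Listener = std::function<void (int)>;

    void addListener (Listener listener) { listeners.push_back (std::move (listener)); }

    void setIndex (int newIndex)
    {
        if (newIndex == index)
            return;                 // nothing changed, so nobody is notified

        index = newIndex;

        for (auto& listener : listeners)
            listener (index);       // e.g. a callback that requests a graph update
    }

    int getIndex() const { return index; }

private:
    int index = 0;
    std::vector<Listener> listeners;
};
```

A callback registered this way runs once per real change, whereas the timer approach calls updateGraph() ten times per second whether or not anything changed.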

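Finally, we modify the updateGraph() function to take the play configuration details from the AudioProcessorGraph instead of the main AudioProcessor, since in the standalone app scenario the main AudioProcessor has been replaced by an AudioProcessorPlayer: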

for (auto slot : slots)

{

if (slot != nullptr)

{

activeSlots.add (slot);

slot->getProcessor()->setPlayConfigDetails (mainProcessor->getMainBusNumInputChannels(),

mainProcessor->getMainBusNumOutputChannels(),

mainProcessor->getSampleRate(),

mainProcessor->getBlockSize());

}

}

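After these changes, your plug-in should run as an application.

Again, beware of screaming feedback when testing the app with built-in inputs and outputs. You can avoid this problem by using headphones.

The source code for this modified version of the plug-in can be found in the AudioProcessorGraphTutorial_02.h file of the demo project.

Summary

In this tutorial, we learnt how to manipulate an AudioProcessorGraph to cascade the effects of plug-ins. In particular, we have:

- Built a channel strip with a series of audio processors.

- Learnt how to connect nodes in an AudioProcessorGraph.

- Created a standalone app from the graph using an AudioProcessorPlayer.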