Tutorial: Build a multi-polyphonic synthesiser

Learn the basics of the MPE standard and how to implement an MPE-compatible synthesiser. Connect your application to a ROLI Seaboard RISE!

Level: Intermediate

Platforms: Windows, macOS, Linux

Classes: MPESynthesiser, MPEInstrument, MPENote, MPEValue, SmoothedValue

Getting started

Download the demo project for this tutorial here: PIP | ZIP. Unzip the project and open the first header file in the Projucer.

If you need help with this step, see Tutorial: Projucer Part 1: Getting started with the Projucer.

It is helpful to read Tutorial: Build a MIDI synthesiser first, because this tutorial refers to it in several places.

The demo project

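The demo project is a simplified version of the MPEDemo project in the JUCE/examples directory. To get the most out of this tutorial you will need an MPE-compatible controller. MPE stands for MIDI Polyphonic Expression, a relatively new specification that allows multidimensional data to be communicated between audio products.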

Examples of such MPE-compatible devices include ROLI's Seaboard range (such as the Seaboard RISE).

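The synthesiser's level is driven by MIDI channel pressure, and its tone by continuous controller 74 (timbre). Unless your controller sends these messages, as the Seaboard RISE does, the synthesiser may sound very quiet.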

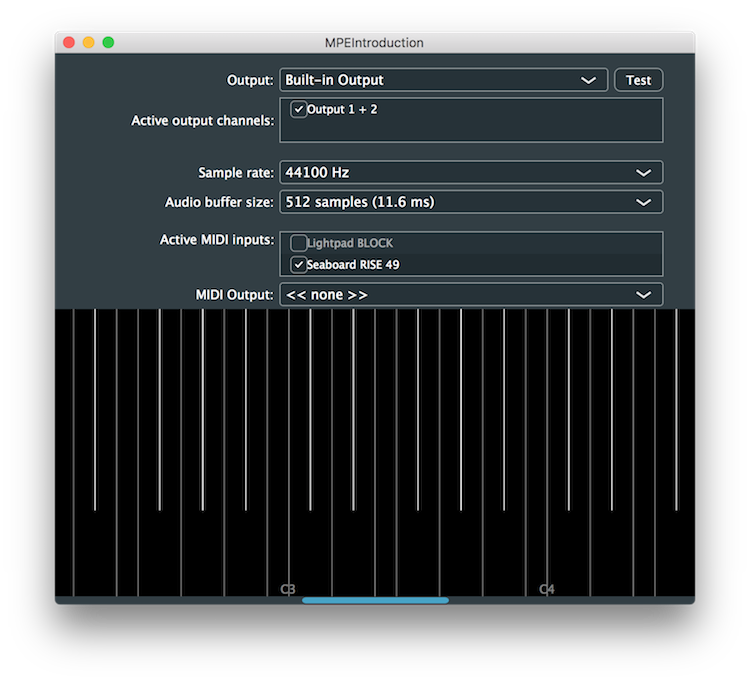

コンピューターに Seaboard RISE を接続すると、デモアプリケーションのウィンドウは次のスクリーンショットのようになります:

MIDI 入力のいずれかを有効にする必要があります(ここでは Seaboard RISE がオプションとして表示されています)。

ビジュアライザー

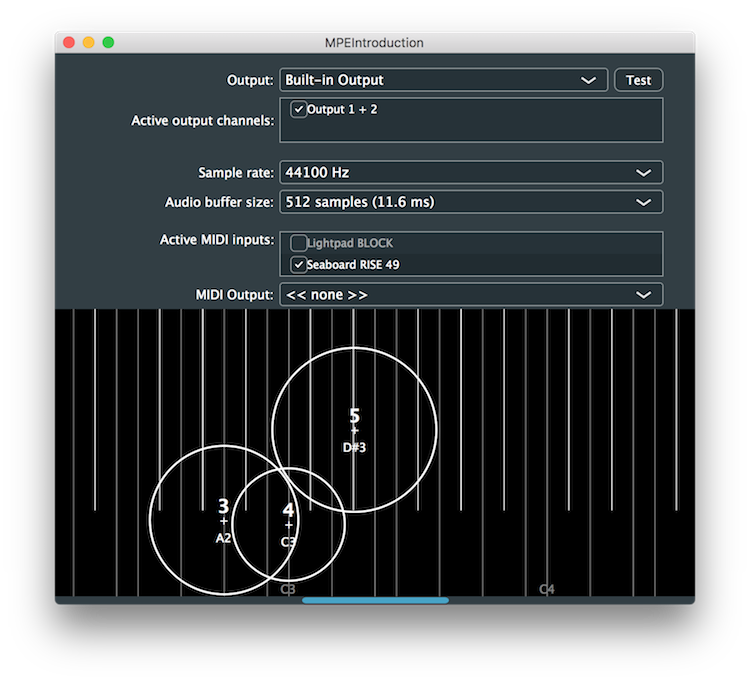

MPE 対応デバイスで演奏されたノートは、ウィンドウの下部に表示されます。次のスクリーンショットに示されています:

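A key feature of MPE is that each new MIDI note event is assigned its own MIDI channel. This contrasts with conventional MIDI, where all notes from a given controller keyboard share the same channel. As a result, each note can be controlled individually by control change messages, pitch bend messages, and so on. In JUCE's MPE implementation, a playing note is represented by an MPENote object, which encapsulates the following data:

- The MIDI channel of the note.

- The initial MIDI note value of the note.

- The note-on velocity (or strike).

- The pitch bend of the note: derived from MIDI pitch bend messages received on this note's MIDI channel.

- The pressure of the note: derived from MIDI channel pressure messages received on this note's MIDI channel.

- The timbre of the note: typically derived from controller 74 messages received on this note's MIDI channel.

- The note-off velocity (or lift): this is only valid after the note-off event has been received, and only until the note stops sounding.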
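The per-channel routing described above can be illustrated with a minimal, self-contained C++ sketch. This is not JUCE's MPENote or MPEInstrument: `NoteState` and `PerChannelNotes` are hypothetical names, the value ranges follow MIDI 1.0 (7-bit data and 14-bit pitch bend centred on 8192), and the starting timbre of 64 is an assumption. The sketch shows that a pressure, pitch bend or controller 74 message needs only its channel number to find the single note it belongs to.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <optional>

// Hypothetical per-note record, loosely mirroring the fields listed above.
struct NoteState
{
    int channel = 1;          // 1-16: the MIDI channel this note owns
    int initialNote = 60;     // 0-127: note number from the note-on
    int noteOnVelocity = 0;   // 0-127: "strike"
    int pitchbend = 8192;     // 0-16383, where 8192 means no bend
    int pressure = 0;         // 0-127: channel pressure
    int timbre = 64;          // 0-127: controller 74 (assumed centre start value)
};

// Because each sounding note owns a channel, a channel message is routed to
// exactly one note by looking up that channel.
class PerChannelNotes
{
public:
    void noteOn (int channel, int note, int velocity)
    {
        NoteState s;
        s.channel = channel;
        s.initialNote = note;
        s.noteOnVelocity = velocity;
        slot (channel) = s;
    }

    void noteOff (int channel) { slot (channel).reset(); }

    void channelPressure (int channel, int value)
    {
        auto& note = slot (channel);
        if (note.has_value())
            note->pressure = value;
    }

    void pitchBend (int channel, int value14Bit)
    {
        auto& note = slot (channel);
        if (note.has_value())
            note->pitchbend = value14Bit;
    }

    void controlChange (int channel, int controller, int value)
    {
        auto& note = slot (channel);
        if (controller == 74 && note.has_value())
            note->timbre = value;
    }

    const std::optional<NoteState>& get (int channel) const { return notes[index (channel)]; }

private:
    static std::size_t index (int channel)
    {
        assert (channel >= 1 && channel <= 16);
        return (std::size_t) (channel - 1);
    }

    std::optional<NoteState>& slot (int channel) { return notes[index (channel)]; }

    std::array<std::optional<NoteState>, 16> notes {};
};
```

For example, after notes start on channels 2 and 3, a channel pressure message on channel 2 changes only the first note.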

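When no notes are playing, the visualiser shows a traditional MIDI keyboard layout. In the demo application's visualiser, each note is represented as follows:

- A filled grey circle represents the note-on velocity (the higher the velocity, the larger the circle).

- The note's MIDI channel is displayed above the "+" symbol inside this circle.

- The initial MIDI note name is displayed below the "+" symbol.

- An overlaid white circle represents the current pressure of the note (again, the higher the pressure, the larger the circle).

- The horizontal position of the note is derived from the initial note and the pitch bend applied to it.

- The vertical position of the note is derived from its timbre parameter (MIDI controller 74 on this note's MIDI channel).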
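A visualiser like this one needs only a simple mapping from note data to screen coordinates. The sketch below is hypothetical: the `Point` type, `notePosition()` and its parameters are not taken from the demo. It assumes the pitch bend has already been converted to semitones and the timbre normalised to 0..1. Drawing higher timbre values nearer the top is an arbitrary choice made for this sketch.

```cpp
#include <cassert>

struct Point
{
    float x, y;
};

// Hypothetical mapping: keyWidth is pixels per semitone, lowestNote is the MIDI
// note drawn at x = 0, and height is the drawable height in pixels.
Point notePosition (int initialNote, float pitchbendSemitones, float timbre,
                    int lowestNote, float keyWidth, float height)
{
    assert (keyWidth > 0.0f && height > 0.0f);
    assert (timbre >= 0.0f && timbre <= 1.0f);

    // Horizontal: the initial note plus the current bend, measured in semitones.
    float x = ((float) (initialNote - lowestNote) + pitchbendSemitones) * keyWidth;

    // Vertical: timbre 1.0 maps to the top edge (y = 0), timbre 0.0 to the bottom.
    float y = (1.0f - timbre) * height;

    return { x, y };
}
```

Bending a note upwards moves its circle to the right, with no change to the note number it started on.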

Other setup

Before looking further into the aspects of the MPE specification that this application demonstrates, let's look at the other components it uses.

First, the MainComponent class inherits from the AudioIODeviceCallback [1] and MidiInputCallback [2] classes:

```cpp
class MainComponent : public juce::Component,
                      private juce::AudioIODeviceCallback, // [1]
                      private juce::MidiInputCallback      // [2]
{
public:
    // ...
```

The MainComponent class also has several important members:

```cpp
    juce::AudioDeviceManager audioDeviceManager;        // [3]
    juce::AudioDeviceSelectorComponent audioSetupComp;  // [4]

    Visualiser visualiserComp;
    juce::Viewport visualiserViewport;

    juce::MPEInstrument visualiserInstrument;
    juce::MPESynthesiser synth;
    juce::MidiMessageCollector midiCollector;           // [5]

    JUCE_DECLARE_NON_COPYABLE_WITH_LEAK_DETECTOR (MainComponent)
};
```

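The AudioDeviceManager [3] class handles the audio and MIDI configuration of the computer. The AudioDeviceSelectorComponent [4] class provides a way to change that configuration from the graphical user interface (see Tutorial: The AudioDeviceManager class). The MidiMessageCollector [5] class makes it easy to gather incoming messages into blocks of timestamped MIDI messages for the audio callback (see Tutorial: Build a MIDI synthesiser).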

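Member variables are initialised in the order in which they are declared. It is therefore important to list the AudioDeviceManager object first, because it is passed to the constructor of the AudioDeviceSelectorComponent object: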

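```cpp
MainComponent()
    : audioSetupComp (audioDeviceManager,
                      0,     // minAudioInputChannels
                      0,     // maxAudioInputChannels
                      0,     // minAudioOutputChannels
                      256,   // maxAudioOutputChannels
                      true,  // showMidiInputOptions must be true
                      true,  // showMidiOutputSelector
                      true,  // showChannelsAsStereoPairs
                      false) // hideAdvancedOptionsWithButton
      // ...
```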

Note one more important argument passed to the AudioDeviceSelectorComponent constructor: showMidiInputOptions must be true for the available MIDI inputs to be displayed.

We set up the AudioDeviceManager object in the same way as in Tutorial: The AudioDeviceManager class, but we also need to add a MIDI input callback [6]:

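```cpp
// No input channels, two output channels, no saved state, fall back to the default device
audioDeviceManager.initialise (0, 2, nullptr, true, {}, nullptr);

// An empty device identifier registers the callback for all enabled MIDI inputs
audioDeviceManager.addMidiInputDeviceCallback ({}, this); // [6]

audioDeviceManager.addAudioCallback (this);
```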

The MIDI input callback

The handleIncomingMidiMessage() function is called each time a MIDI message is received from any of the MIDI inputs enabled in the user interface:

```cpp
void handleIncomingMidiMessage (juce::MidiInput* /*source*/,
                                const juce::MidiMessage& message) override
{
    visualiserInstrument.processNextMidiEvent (message);
    midiCollector.addMessageToQueue (message);
}
```

Here we pass each MIDI message to both of the following:

- the visualiserInstrument member, which drives the visualiser display; and

- the midiCollector member, which hands the messages on to the synthesiser in the audio callback.
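The hand-off between the MIDI input thread and the audio thread can be sketched without JUCE. The class below is a hypothetical and heavily simplified stand-in for what a collector such as MidiMessageCollector does; it is not that class's actual algorithm. `TimedEvent`, `SimpleEventCollector`, `prepare()`, `push()` and `popBlock()` are invented names, and an `int` payload stands in for a MIDI message. Events are delivered one block late, with offsets measured from the start of the previous block, so their relative timing is preserved.

```cpp
#include <algorithm>
#include <cassert>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical stand-in for a MIDI message: a timestamp plus an opaque payload.
struct TimedEvent
{
    double timeSeconds;
    int payload;
};

class SimpleEventCollector
{
public:
    void prepare (double newSampleRate, double startTimeSeconds)
    {
        std::lock_guard<std::mutex> lock (mutex);
        sampleRate = newSampleRate;
        lastBlockTime = startTimeSeconds;
        pending.clear();
    }

    // Called on the MIDI input thread.
    void push (TimedEvent e)
    {
        std::lock_guard<std::mutex> lock (mutex);
        pending.push_back (e);
    }

    // Called on the audio thread: returns (sampleOffset, payload) pairs for
    // everything that arrived since the previous call. This simplified version
    // allocates, which a real-time implementation would avoid.
    std::vector<std::pair<int, int>> popBlock (double nowSeconds, int numSamples)
    {
        assert (numSamples > 0);
        std::vector<TimedEvent> taken;
        double blockStart = 0.0, rate = 0.0;
        {
            std::lock_guard<std::mutex> lock (mutex);
            taken.swap (pending);
            blockStart = lastBlockTime;
            rate = sampleRate;
            lastBlockTime = nowSeconds;
        }

        std::vector<std::pair<int, int>> block;
        for (const auto& e : taken)
        {
            auto offset = (int) ((e.timeSeconds - blockStart) * rate);
            block.emplace_back (std::clamp (offset, 0, numSamples - 1), e.payload);
        }
        return block;
    }

private:
    std::mutex mutex;
    double sampleRate = 44100.0;
    double lastBlockTime = 0.0;
    std::vector<TimedEvent> pending;
};
```

Sending every message to both consumers keeps the display and the audio in sync: the visualiser updates immediately, while the audio path receives sample-accurate offsets one block later.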

The audio callback

Before any audio callbacks occur, the synth and midiCollector members must be told the device's sample rate. We do this in the audioDeviceAboutToStart() function:

```cpp
void audioDeviceAboutToStart (juce::AudioIODevice* device) override
{
    auto sampleRate = device->getCurrentSampleRate();
    midiCollector.reset (sampleRate);
    synth.setCurrentPlaybackSampleRate (sampleRate);
}
```

The audioDeviceIOCallbackWithContext() function may not appear to do anything MPE-specific:

```cpp
void audioDeviceIOCallbackWithContext (const float* const* /*inputChannelData*/,
                                       int /*numInputChannels*/,
                                       float* const* outputChannelData,
                                       int numOutputChannels,
                                       int numSamples,
                                       const juce::AudioIODeviceCallbackContext& /*context*/) override
{
    // Clear all the output channels
    for (auto i = 0; i < numOutputChannels; ++i)
    {
        if (outputChannelData[i] != nullptr)
            juce::FloatVectorOperations::clear (outputChannelData[i], numSamples);
    }

    // Fetch the MIDI messages from the collector
    juce::MidiBuffer incomingMidi;
    midiCollector.removeNextBlockOfMessages (incomingMidi, numSamples);

    // Wrap the output channels in a named buffer (renderNextBlock() takes a
    // non-const reference, so a temporary cannot be passed) and let the synth render
    juce::AudioBuffer<float> buffer (outputChannelData, numOutputChannels, numSamples);
    synth.renderNextBlock (buffer, incomingMidi, 0, numSamples);
}
```

For more details on implementing the audio callback, see Tutorial: Build a MIDI synthesiser.

The MPEInstrument class

As mentioned earlier, the visualiserInstrument member drives the visualiser. It is an MPEInstrument object, which maintains the state of the notes currently being played. The Visualiser class monitors this state by implementing the MPEInstrument::Listener interface:

```cpp
class Visualiser : public juce::Component,
                   private juce::MPEInstrument::Listener
{
    // ...
```

Here we need to implement the Visualiser::noteAdded(), Visualiser::notePressureChanged(), Visualiser::notePitchbendChanged(), Visualiser::noteTimbreChanged() and Visualiser::noteKeyStateChanged() functions. We use the MPEInstrument::getMostRecentNote() and MPEInstrument::getNote() functions to obtain MPENote objects from the MPEInstrument object.
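The listener arrangement can be sketched in plain C++. `NoteInfo`, `NoteListener`, `TinyInstrument` and `CountingListener` are hypothetical names; the signatures are not JUCE's, and the sketch omits note-off handling. The instrument owns the note state. Each registered listener (a simple counter here, the Visualiser in the demo) is told only about the note whose channel changed.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct NoteInfo
{
    int channel;
    int noteNumber;
    float pressure;
};

// Hypothetical listener interface with empty default implementations.
struct NoteListener
{
    virtual ~NoteListener() = default;
    virtual void noteAdded (const NoteInfo&) {}
    virtual void notePressureChanged (const NoteInfo&) {}
};

// A tiny "instrument" that owns the note state and broadcasts changes.
class TinyInstrument
{
public:
    void addListener (NoteListener* l)
    {
        assert (l != nullptr);
        listeners.push_back (l);
    }

    void removeListener (NoteListener* l)
    {
        listeners.erase (std::remove (listeners.begin(), listeners.end(), l), listeners.end());
    }

    void noteOn (int channel, int noteNumber)
    {
        notes.push_back ({ channel, noteNumber, 0.0f });
        for (auto* l : listeners)
            l->noteAdded (notes.back());
    }

    // Only the note that owns 'channel' is updated and reported.
    void channelPressure (int channel, float value)
    {
        for (auto& n : notes)
        {
            if (n.channel != channel)
                continue;

            n.pressure = value;
            for (auto* l : listeners)
                l->notePressureChanged (n);
        }
    }

private:
    std::vector<NoteInfo> notes;
    std::vector<NoteListener*> listeners;
};

// Example listener in the role of the Visualiser: it only counts events.
struct CountingListener : NoteListener
{
    int added = 0, pressureChanges = 0;
    void noteAdded (const NoteInfo&) override { ++added; }
    void notePressureChanged (const NoteInfo&) override { ++pressureChanges; }
};
```

A pressure message on a channel with no sounding note produces no notification.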

Exercise: Update the visualiser so that the note-off velocity (lift) is shown in a different colour. A fast lift should be shown in a brighter colour and a slow lift in a darker colour.

The MPESynthesiser class

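The most important part of this application is the synth member, which generates the actual audio in the audio callback. The MPESynthesiser class is similar to the Synthesiser class (see Tutorial: Build a MIDI synthesiser), but it is tailored for MPE. To use it, you need to create a class that inherits from the MPESynthesiserVoice class.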

This application has an MPEDemoSynthVoice class:

```cpp
class MPEDemoSynthVoice : public juce::MPESynthesiserVoice
{
public:
    // ...
```

In the MainComponent constructor, we need to add several MPEDemoSynthVoice objects to the synthesiser so that it can play more than one note at a time (polyphony):

```cpp
for (auto i = 0; i < 15; ++i)
    synth.addVoice (new MPEDemoSynthVoice());

synth.setVoiceStealingEnabled (false);
```

This number should generally match the number of simultaneous notes your MPE device supports. For the Seaboard RISE, this is 15.
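A fixed pool of voices with voice stealing disabled can be modelled as follows: a new note takes the first free voice, and if none is free the note is not played. The class below is a hypothetical sketch of that policy, not MPESynthesiser's actual code. `VoicePool` and its methods are invented names, and the sketch ignores voices that are still tailing off.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <optional>

template <std::size_t NumVoices>
class VoicePool
{
public:
    // Returns the index of the voice that will play the note, or nothing if
    // every voice is busy (no voice is stolen).
    std::optional<std::size_t> startNote (int channel)
    {
        assert (channel >= 1 && channel <= 16);
        for (std::size_t i = 0; i < NumVoices; ++i)
        {
            if (voiceChannel[i] == 0)
            {
                voiceChannel[i] = channel;
                return i;
            }
        }
        return std::nullopt;
    }

    // Frees whichever voice is playing the note on 'channel'.
    void stopNote (int channel)
    {
        for (auto& c : voiceChannel)
            if (c == channel)
                c = 0;
    }

    std::size_t activeVoices() const
    {
        std::size_t n = 0;
        for (auto c : voiceChannel)
            if (c != 0)
                ++n;
        return n;
    }

private:
    std::array<int, NumVoices> voiceChannel {};  // 0 = free, otherwise the note's channel
};
```

With 15 voices, a sixteenth simultaneous note is ignored until one of the earlier notes is released.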

The MPESynthesiserVoice class

The MPEDemoSynthVoice class declares several member variables. Most of them are wrapped in the SmoothedValue template class (see Tutorial: Build a cascading gain class):

```cpp
private:
    //==============================================================================
    juce::SmoothedValue<double> level, timbre, frequency;

    double phase      = 0.0;
    double phaseDelta = 0.0;
    double tailOff    = 0.0;

    // Some parameters
    static constexpr auto maxLevel = 0.05;
    static constexpr auto maxLevelDb = 31.0;
    static constexpr auto smoothingLengthInSeconds = 0.01;
```

The MPEDemoSynthVoice::prepare() function initialises the SmoothedValue objects with the current sample rate and a 10 ms ramp length:

```cpp
void prepare (const juce::dsp::ProcessSpec& spec)
{
    level    .reset (spec.sampleRate, smoothingLengthInSeconds);
    timbre   .reset (spec.sampleRate, smoothingLengthInSeconds);
    frequency.reset (spec.sampleRate, smoothingLengthInSeconds);
}
```
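The effect of `reset (sampleRate, smoothingLengthInSeconds)` is easier to see in a simplified linear ramp. The sketch below is modelled loosely on SmoothedValue's linear mode but is not its source; `LinearSmoother` is a hypothetical name. The ramp length in samples is `floor (seconds * sampleRate)`, which is why the sample rate is needed: 0.01 s is 441 samples at 44.1 kHz.

```cpp
#include <cassert>
#include <cmath>

class LinearSmoother
{
public:
    // Sets the ramp length in samples and snaps the current value to the target.
    void reset (double sampleRate, double rampLengthSeconds)
    {
        assert (sampleRate > 0.0 && rampLengthSeconds >= 0.0);
        stepsToTarget = (int) std::floor (rampLengthSeconds * sampleRate);
        current = target;
        countdown = 0;
    }

    // Starts a new ramp from the current value towards newTarget.
    void setTargetValue (double newTarget)
    {
        target = newTarget;
        countdown = stepsToTarget;

        if (countdown <= 0)
        {
            current = target;
            return;
        }

        step = (target - current) / countdown;
    }

    // Advances by one sample; lands exactly on the target at the end of the ramp.
    double getNextValue()
    {
        if (countdown <= 0)
            return target;

        --countdown;
        current = (countdown > 0) ? current + step : target;
        return current;
    }

private:
    double current = 0.0, target = 0.0, step = 0.0;
    int stepsToTarget = 0, countdown = 0;
};
```

Smoothing each parameter over a few milliseconds avoids audible clicks when pressure, pitch bend or timbre values jump between MIDI messages.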

Starting notes

The MPEDemoSynthVoice::noteStarted() function is called when this voice starts playing a note:

```cpp
void noteStarted() override
{
    jassert (currentlyPlayingNote.isValid());
    jassert (currentlyPlayingNote.keyState == juce::MPENote::keyDown
             || currentlyPlayingNote.keyState == juce::MPENote::keyDownAndSustained);

    // The MPENote information is exposed in the currentlyPlayingNote member and
    // can be accessed at any time. Use it in your custom voice.
    level    .setTargetValue (currentlyPlayingNote.pressure.asUnsignedFloat());
    frequency.setTargetValue (currentlyPlayingNote.getFrequencyInHertz());
    timbre   .setTargetValue (currentlyPlayingNote.timbre.asUnsignedFloat());

    phase = 0.0;
    auto cyclesPerSample = frequency.getNextValue() / currentSampleRate;
    phaseDelta = 2.0 * juce::MathConstants<double>::pi * cyclesPerSample;

    tailOff = 0.0;
}
```

Here we access the MPESynthesiserVoice::currentlyPlayingNote member to obtain the MPENote information for the new note. As mentioned earlier, the MPENote class has members such as MPENote::pressure, MPENote::pitchbend and MPENote::timbre.

The MPENote class also provides a convenience function, MPENote::getFrequencyInHertz(), which calculates the note's current frequency, taking its pitch bend into account.
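The calculation behind a frequency that includes pitch bend is standard 12-tone equal temperament. The functions below are a hypothetical sketch, not JUCE's implementation. They assume A4 (MIDI note 69) = 440 Hz and a symmetric mapping of the 14-bit pitch-bend value onto a bend range given in semitones. In MPE, the default pitch-bend range for member channels is 48 semitones.

```cpp
#include <cassert>
#include <cmath>

// Frequency of a note plus a (possibly fractional) bend in semitones.
double frequencyInHertz (int initialNote, double pitchbendSemitones, double frequencyOfA = 440.0)
{
    return frequencyOfA * std::pow (2.0, (initialNote + pitchbendSemitones - 69.0) / 12.0);
}

// Maps a 14-bit pitch-bend value (0..16383, centre 8192) to semitones.
// Assumption: the same scale is used above and below the centre.
double pitchbendToSemitones (int value14Bit, double rangeSemitones)
{
    assert (value14Bit >= 0 && value14Bit <= 16383);
    return (value14Bit - 8192) / 8192.0 * rangeSemitones;
}
```

For example, note 69 with no bend gives 440 Hz, and the same note bent up 12 semitones gives 880 Hz.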

Stopping notes

The MPEDemoSynthVoice::noteStopped() function is called when a note stops:

```cpp
void noteStopped (bool allowTailOff) override
{
    jassert (currentlyPlayingNote.keyState == juce::MPENote::off);

    if (allowTailOff)
    {
        // Start a tail-off by setting this flag. The render callback will pick it up,
        // perform the fade-out, and call clearCurrentNote() when it has finished.
        if (tailOff == 0.0) // noteStopped() may be called more than once, so only
                            // start the tail-off if it has not already started.
            tailOff = 1.0;
    }
    else
    {
        // We have been told to stop playing immediately, so reset everything.
        clearCurrentNote();
        phaseDelta = 0.0;
    }
}
```

This is very similar to the SineWaveVoice::stopNote() function in Tutorial: Build a MIDI synthesiser. There is nothing MPE-specific here.

Exercise: Modify the MPEDemoSynthVoice::noteStopped() function so that the note-off velocity (lift) changes the release rate of the note. A faster lift should result in a shorter release time.
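One possible approach to this exercise is sketched below outside JUCE. It is only a suggestion: `decayPerSample()`, `releaseSecondsForLift()` and the 0.5 s / 0.02 s end points are hypothetical choices. The idea is to pick the per-sample multiplier that takes the tail-off gain from 1.0 down to the 0.005 threshold in a chosen release time. In the voice, you could read `currentlyPlayingNote.noteOffVelocity.asUnsignedFloat()` in noteStopped() and use the resulting multiplier in place of the fixed 0.99 in renderNextBlock().

```cpp
#include <cassert>
#include <cmath>

// Per-sample multiplier so that gain *= k reaches 'threshold' after
// releaseSeconds * sampleRate samples, starting from 1.0.
double decayPerSample (double releaseSeconds, double sampleRate, double threshold = 0.005)
{
    assert (releaseSeconds > 0.0 && sampleRate > 0.0);
    assert (threshold > 0.0 && threshold < 1.0);
    return std::pow (threshold, 1.0 / (releaseSeconds * sampleRate));
}

// Faster lift (closer to 1.0) gives a shorter release. The end points are
// arbitrary values chosen for illustration.
double releaseSecondsForLift (float lift)
{
    assert (lift >= 0.0f && lift <= 1.0f);
    const double slowest = 0.5, fastest = 0.02;
    return slowest + (fastest - slowest) * lift;
}
```

Because the multiplier is derived from the sample rate, the release time stays the same in seconds whatever rate the device runs at.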

Parameter changes

Callbacks notify the voice when the pressure, pitch bend or timbre of its note changes:

```cpp
void notePressureChanged() override
{
    level.setTargetValue (currentlyPlayingNote.pressure.asUnsignedFloat());
}

void notePitchbendChanged() override
{
    frequency.setTargetValue (currentlyPlayingNote.getFrequencyInHertz());
}

void noteTimbreChanged() override
{
    timbre.setTargetValue (currentlyPlayingNote.timbre.asUnsignedFloat());
}
```

Again, we access the MPESynthesiserVoice::currentlyPlayingNote member to obtain the current value of each of these parameters.

Generating the audio

The MPEDemoSynthVoice::renderNextBlock() function generates the audio signal and mixes this voice's output into the buffer it is given:

```cpp
void renderNextBlock (juce::AudioBuffer<float>& outputBuffer,
                      int startSample,
                      int numSamples) override
{
    if (phaseDelta != 0.0)
    {
        if (tailOff > 0.0)
        {
            while (--numSamples >= 0)
            {
                auto currentSample = getNextSample() * (float) tailOff;

                for (auto i = outputBuffer.getNumChannels(); --i >= 0;)
                    outputBuffer.addSample (i, startSample, currentSample);

                ++startSample;

                tailOff *= 0.99;

                if (tailOff <= 0.005)
                {
                    clearCurrentNote();
                    phaseDelta = 0.0;
                    break;
                }
            }
        }
        else
        {
            while (--numSamples >= 0)
            {
                auto currentSample = getNextSample();

                for (auto i = outputBuffer.getNumChannels(); --i >= 0;)
                    outputBuffer.addSample (i, startSample, currentSample);

                ++startSample;
            }
        }
    }
}
```
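With the constants used in renderNextBlock(), the tail-off length is fixed in samples, not seconds. The standalone sketch below counts those samples by mirroring the loop above: render one sample at the current gain, multiply the gain by 0.99, and stop once it is at or below 0.005. `tailOffLengthInSamples()` is a hypothetical helper written for this illustration.

```cpp
#include <cassert>

int tailOffLengthInSamples (double decay = 0.99, double threshold = 0.005)
{
    assert (decay > 0.0 && decay < 1.0);
    assert (threshold > 0.0 && threshold < 1.0);

    double tailOff = 1.0;
    int samples = 0;

    while (true)
    {
        ++samples;          // one output sample rendered at the current gain
        tailOff *= decay;

        if (tailOff <= threshold)
            return samples;
    }
}
```

The default arguments give 528 samples. That is roughly 12 ms at 44.1 kHz and 11 ms at 48 kHz, so the release becomes shorter as the sample rate rises.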

It calls MPEDemoSynthVoice::getNextSample() to generate the waveform:

```cpp
float getNextSample() noexcept
{
    auto levelDb = (level.getNextValue() - 1.0) * maxLevelDb;
    auto amplitude = std::pow (10.0f, 0.05f * levelDb) * maxLevel;

    // The timbre is used to blend between a sine wave and a square wave.
    auto f1 = std::sin (phase);
    auto f2 = std::copysign (1.0, f1);
    auto a2 = timbre.getNextValue();
    auto a1 = 1.0 - a2;

    auto nextSample = float (amplitude * ((a1 * f1) + (a2 * f2)));

    auto cyclesPerSample = frequency.getNextValue() / currentSampleRate;
    phaseDelta = 2.0 * juce::MathConstants<double>::pi * cyclesPerSample;
    phase = std::fmod (phase + phaseDelta, 2.0 * juce::MathConstants<double>::pi);

    return nextSample;
}
```


This simply crossfades between a sine wave and a (non-band-limited) square wave according to the value of the timbre parameter.
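The level and waveform maths in getNextSample() can be checked in isolation. The sketch below restates them without JUCE. `amplitudeForPressure()` and `sineSquareBlend()` are hypothetical names, and pressure and timbre are assumed to be normalised to 0..1. Full pressure gives `maxLevel` (0.05). Zero pressure gives a level 31 dB lower, about 0.0014, which is why the synthesiser is nearly silent without channel pressure.

```cpp
#include <cassert>
#include <cmath>

constexpr double maxLevel = 0.05;
constexpr double maxLevelDb = 31.0;

// Pressure 1.0 -> 0 dB (maxLevel); pressure 0.0 -> maxLevelDb below maxLevel.
double amplitudeForPressure (double pressure)
{
    assert (pressure >= 0.0 && pressure <= 1.0);
    double levelDb = (pressure - 1.0) * maxLevelDb;
    return std::pow (10.0, 0.05 * levelDb) * maxLevel;   // 0.05 * dB is dB / 20
}

// Linear crossfade: timbre 0.0 is a pure sine, 1.0 a naive square wave.
double sineSquareBlend (double phase, double timbre)
{
    assert (timbre >= 0.0 && timbre <= 1.0);
    double sine = std::sin (phase);
    double square = std::copysign (1.0, sine);
    return (1.0 - timbre) * sine + timbre * square;
}
```

Because the square wave is not band-limited, high notes with a high timbre value will alias audibly.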

Exercise: Modify the MPEDemoSynthVoice class so that, depending on the timbre parameter, it crossfades between two sine waves an octave apart.
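A possible core for this exercise is sketched below as a standalone function; `octaveBlend()` is a hypothetical name. It reuses the voice's single phase accumulator: `sin (2 * phase)` sounds one octave above `sin (phase)`. Wrapping the phase by 2π shifts `2 * phase` by 4π, so the upper sine stays continuous when the phase wraps.

```cpp
#include <cassert>
#include <cmath>

// Crossfade between the fundamental (timbre 0.0) and the octave above (timbre 1.0).
double octaveBlend (double phase, double timbre)
{
    assert (timbre >= 0.0 && timbre <= 1.0);
    double fundamental = std::sin (phase);
    double octaveUp = std::sin (2.0 * phase);
    return (1.0 - timbre) * fundamental + timbre * octaveUp;
}
```

In getNextSample(), this would replace the `f1`/`f2` blend, with `a2` as the timbre value.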

Summary

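In this tutorial we have introduced some of JUCE's MPE-based classes. You should now know:

- What MPE is.

- That MPE-compatible devices assign each note its own MIDI channel.

- How the MPENote class stores information about a note, such as its MIDI channel, original note number, velocity and pitch bend.

- That the MPEInstrument class maintains the state of the notes currently being played.

- That the MPESynthesiser class contains an MPEInstrument object, which it uses to drive the synthesiser.

- That to implement the audio code for a synthesiser, you need to write a class that inherits from the MPESynthesiserVoice class.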